AI in Healthcare: Navigating the Legal Minefield as Adoption Soars

Artificial intelligence (AI) is rapidly transforming healthcare, offering unprecedented opportunities for improved diagnostics, personalized treatment plans, and streamlined operations. However, this technological revolution isn't without its challenges. As AI’s presence in hospitals, clinics, and research labs expands, so too do the complex legal questions surrounding its use. Experts are sounding the alarm, highlighting a growing need for clarity and robust legal frameworks to address potential liabilities.

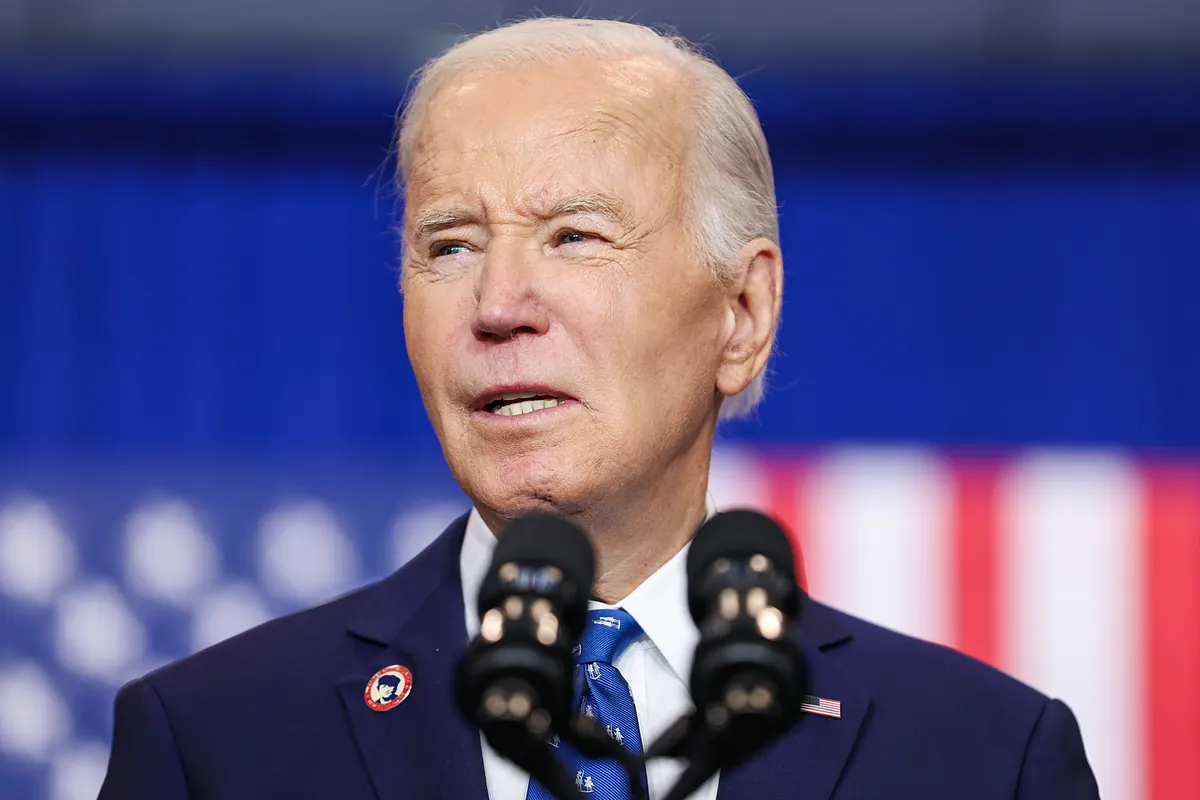

At the recent STAT Breakthrough event, leading legal minds and healthcare professionals convened to discuss these emerging issues. The central concern was accountability when AI systems make errors or cause harm. Who is responsible when an AI-powered diagnostic tool misdiagnoses a patient, or when an automated surgical system malfunctions? Is it the hospital, the AI developer, the clinician using the system, or some combination of the three?

The Blurring of Responsibility

The traditional model of medical malpractice, in which a human doctor is held accountable for negligence, is proving inadequate in the age of AI. An AI system's output depends on its algorithm, the data it was trained on, and how it is deployed, which makes it difficult to pinpoint the source of an error. Was the error caused by flawed training data, a bug in the algorithm, or improper implementation by the healthcare provider?

“We’re entering uncharted territory,” explained Dr. Eleanor Vance, a medical ethicist and legal expert. “The complexity of AI systems makes it difficult to establish causation and determine liability. We need to develop new legal standards that reflect the unique nature of AI-driven healthcare.”

Key Legal Considerations

- Data Bias: AI models are only as good as the data they are trained on. If the data is biased (for example, if it underrepresents certain demographic groups), the AI system may perpetuate and even amplify those biases, leading to unequal or harmful outcomes.

- Transparency and Explainability: Many AI systems, particularly deep learning models, are “black boxes,” meaning it's difficult to understand how they arrive at their conclusions. This lack of transparency makes it challenging to identify errors and ensure accountability.

- Regulatory Framework: Current regulations governing medical devices and healthcare practices do not adequately address the specific challenges posed by AI. There is a pressing need for updated regulations that provide clear guidance on the development, deployment, and use of AI in healthcare.

- Cybersecurity Risks: AI systems are vulnerable to cyberattacks, which could compromise patient data or manipulate the AI’s decision-making process. Robust cybersecurity measures are essential to protect against these risks.

- Informed Consent: Patients should be informed when AI is being used in their care and given the opportunity to consent to its use. This includes explaining the potential benefits and risks of AI-powered treatments.

Moving Forward: A Collaborative Approach

Addressing these legal challenges requires a collaborative effort involving healthcare providers, AI developers, legal experts, regulators, and policymakers. Developing clear guidelines and legal frameworks will be crucial to fostering trust in AI and ensuring its responsible adoption in healthcare. This includes establishing robust auditing processes, promoting transparency and explainability, and addressing data bias proactively. Furthermore, ongoing education and training for healthcare professionals on the ethical and legal implications of AI are paramount.

The potential benefits of AI in healthcare are undeniable. However, realizing this potential requires a thoughtful and proactive approach to navigating the legal and ethical complexities that lie ahead. Failing to do so could stifle innovation and undermine patient safety.